In 2020, we built the maintenance_tasks gem as a solution for performing data migrations in Rails applications. Adopting the gem in Shopify’s core Rails monolith was not so simple, however! We had to adapt the gem to fit Core’s sharded architecture and to handle data migrations across millions of rows. Let’s take a look at how we did it.

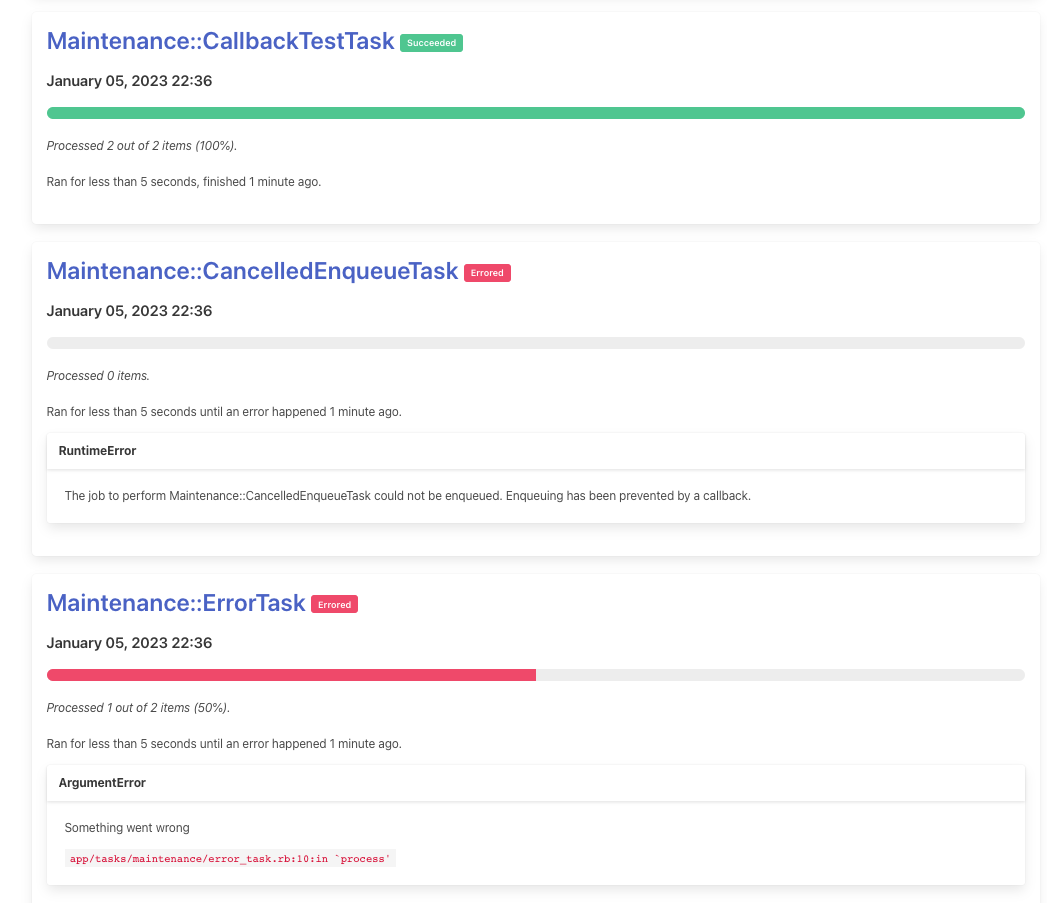

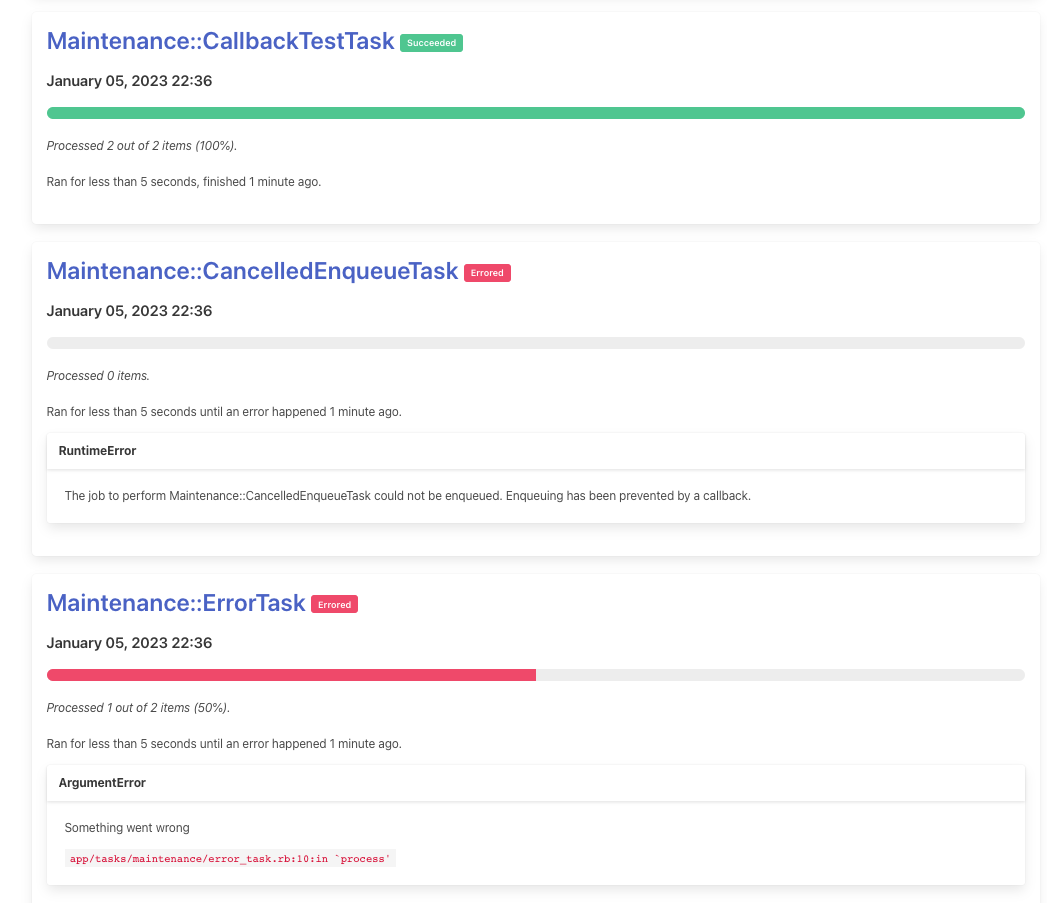

The gem is designed as a mountable Rails engine and offers a web UI for queuing and managing maintenance tasks.

The Maintenance Tasks gem is designed as follows:

- A maintenance_tasks_runs table is used to track information about a running task, including its name, the number of records that have been processed, the task’s status, etc.
- Each task is defined as a Task class. The class must define two methods: #collection and #process.
  - #collection defines the collection of elements to be processed by the task.
  - #process defines the work to be done by a single iteration of the task on an element from the provided collection.
- Tasks are run through the Runner class.
- The Runner enqueues a TaskJob and creates a Run record. The job iterates over the specified collection and performs the task’s logic.
- The job persists the task’s progress to the Run record.

For most Rails apps, installing maintenance_tasks is as simple as adding the gem and running the install generator to create the proper database table and mount the engine in config/routes.rb. After successfully releasing v1.0 of the gem, we wanted to adopt it in Shopify’s Core monolith. We had a couple of challenges to overcome: integrating with Core’s existing background job infrastructure, and working with its podded architecture.
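To make the task contract from the design overview concrete, here is a minimal plain-Ruby sketch of the two-method interface and the iterate-and-record loop. It deliberately does not load the gem: ToyRun, UppercaseNamesTask, and run_task are hypothetical stand-ins for the Run record, a Task class, and the job's iteration loop, and the status values are assumptions made for illustration. In a real app the collection would more likely be a database relation than an in-memory array.

```ruby
# Hypothetical stand-in for the Run record: tracks progress and status.
ToyRun = Struct.new(:task_name, :tick_count, :status)

# A task defines the two methods described above.
class UppercaseNamesTask
  def initialize(records)
    @records = records
  end

  # The collection of elements to be processed by the task.
  def collection
    @records
  end

  # The work done for a single element of the collection.
  def process(record)
    record[:name] = record[:name].upcase
  end
end

# Stand-in for the job: iterate over the collection, process each element,
# and record progress on the run after every iteration.
def run_task(task)
  run = ToyRun.new(task.class.name, 0, :running)
  task.collection.each do |element|
    task.process(element)
    run.tick_count += 1
  end
  run.status = :succeeded
  run
end

records = [{ name: "ada" }, { name: "grace" }]
run = run_task(UppercaseNamesTask.new(records))
puts "#{run.task_name}: #{run.status} after #{run.tick_count} ticks"
```

Keeping #collection and #process separate is what lets the job count progress per element, which is the information the run record exposes.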

Our general recommendation for customizing the job class used by the gem is to set MaintenanceTasks.job in an initializer, with the specified job inheriting from MaintenanceTasks::TaskJob. In Shopify Core, the bulk of our background job logic is found in a base ApplicationJob class. We wanted to leverage that job class, so we ended up extracting the bulk of the job logic in the gem to a module, MaintenanceTasks::TaskJobConcern. We still recommend that Rails apps requiring custom jobs inherit from MaintenanceTasks::TaskJob, an empty class that includes TaskJobConcern, but in our monolith we specified a custom job class that inherits from ApplicationJob and includes the task job logic via MaintenanceTasks::TaskJobConcern.
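The value of moving the job logic into a module can be shown with a small plain-Ruby sketch. Everything here is a stand-in written for illustration, not the gem's code: the ApplicationJob, TaskJobConcern, CoreTaskJob, and CollectTask definitions below are hypothetical and only mimic the shape of the real classes.

```ruby
# Hypothetical stand-in for an app's base job class with shared behaviour.
class ApplicationJob
  def queue_name
    "default"
  end
end

# Stand-in for the concern: the task-running logic lives in a module,
# so it can be mixed into any job class regardless of its parent.
module TaskJobConcern
  def perform(task)
    task.collection.each { |element| task.process(element) }
  end
end

# Custom job: inherits the app-wide behaviour and includes the task logic.
class CoreTaskJob < ApplicationJob
  include TaskJobConcern
end

# Tiny task used only to exercise the job in this sketch.
class CollectTask
  attr_reader :collection, :seen

  def initialize(collection)
    @collection = collection
    @seen = []
  end

  def process(element)
    @seen << element
  end
end

job = CoreTaskJob.new
task = CollectTask.new([1, 2, 3])
job.perform(task)
puts "processed #{task.seen.size} elements on queue #{job.queue_name}"
```

Because the logic is included rather than inherited, the custom job keeps its own superclass chain, which is the property we needed in Core.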

The main challenge to tackle in adopting the Maintenance Tasks gem in Shopify Core was getting it to work with the podded architecture of the application. The distinction between the terms “shard” and “pod” is subtle, so let’s clarify the language a bit here:

When we talk about Shopify Core being podded, we’re saying that there are many pods running independent versions of Shopify. Each pod has not only its own database, but also has its own job workers and infrastructure. To run a backfill task against all shops at Shopify, we’d need to kick off a task job on each pod so that rows across each shard would be updated.

Shopify Core also employs a concept called “shard m” or the main pod context. In addition to shop-scoped database shards, we have an “m-shard” database that stores data shared across shops: API permissions, domains, etc.

We determined that the requirements for adopting the gem in Core were as follows:

We decided to expand on the gem’s architecture:

- A maintenance_tasks_runs table that would track a running task on a given pod.
- A maintenance_tasks_cross_pod_runs table in the m-shard database that would aggregate task run data across all pods for a given task.

We still had a big problem, though: there is a strict boundary between the pod context and the main pod context. Podded jobs cannot be enqueued from the main pod context, because this would mean enqueuing jobs across data centres. We needed a way to queue task jobs across multiple pods, and also a way to have the podded tasks report their progress back to the main pod context in order to aggregate task data across pods. Luckily for us, we were able to leverage tooling that already existed in Shopify’s monolith: the cross pod task (CPT) framework.

Shopify Core’s CPT framework is a system that can be used to handle queuing and synchronizing work across pods. Here’s a simplified view of how it works:

- A synchronization job creates a CrossPodWorkUnit record for each pod that should be targeted. These records are all stored in the main pod context in a cross_pod_work_units table.
- A processing job on each pod calls #run on every queued work unit. This method performs some logic and then marks the unit as either processing (meaning that there is more work to be done), or finished.
- The framework provides shared behaviour that CrossPodWorkUnit subclasses can leverage.

To extend Maintenance Tasks to Core, we wrote two new work unit classes: MaintenanceTaskStart handles queuing new tasks across pods and syncing progress from the pods back to CrossPodRun in the main pod context, and MaintenanceTaskStop handles pausing or cancelling a task across pods.
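The work unit lifecycle can be sketched in a few lines of plain Ruby. The CPT framework is internal to Shopify, so nothing below is its real API: ToyWorkUnit, CountdownUnit, perform_step, and process_pod are hypothetical names, and the in-memory array stands in for the cross_pod_work_units table.

```ruby
# Hypothetical stand-in for a row in the cross_pod_work_units table.
class ToyWorkUnit
  attr_reader :pod_id, :state

  def initialize(pod_id)
    @pod_id = pod_id
    @state = :queued
  end

  # Performs one step of work, then marks the unit as processing
  # (more work to do) or finished. Subclasses implement #perform_step
  # and return true once there is nothing left to do.
  def run
    @state = perform_step ? :finished : :processing
  end
end

# Example subclass: finishes after a fixed number of #run calls.
class CountdownUnit < ToyWorkUnit
  def initialize(pod_id, steps)
    super(pod_id)
    @remaining = steps
  end

  def perform_step
    @remaining -= 1
    @remaining <= 0
  end
end

# Processing step for one pod: call #run on that pod's unfinished units.
def process_pod(units, pod_id)
  units.select { |u| u.pod_id == pod_id && u.state != :finished }.each(&:run)
end

# Synchronization step: create one work unit per targeted pod.
# Pod 0 needs one step to finish, pod 1 needs two.
units = [0, 1].map { |pod_id| CountdownUnit.new(pod_id, pod_id + 1) }

[0, 1].each { |pod_id| process_pod(units, pod_id) }
first_pass = units.map(&:state)

[0, 1].each { |pod_id| process_pod(units, pod_id) }
second_pass = units.map(&:state)

puts "after first pass:  #{first_pass.inspect}"
puts "after second pass: #{second_pass.inspect}"
```

The point of the sketch is that each pod only ever touches its own work units, while the records themselves can all be inspected from one place.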

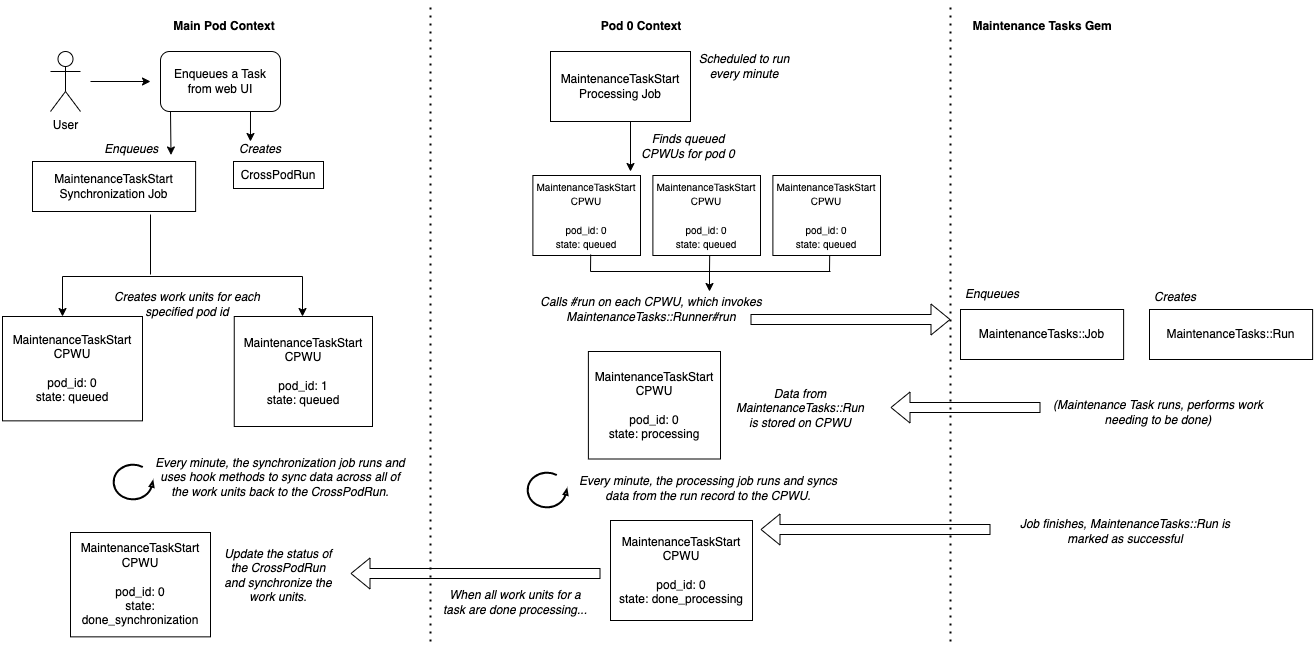

Here’s an overview of how the MaintenanceTaskStart cross pod work unit operates with the maintenance_tasks gem (you might need to zoom in!):

The process looks something like this:

1. A MaintenanceTaskStart synchronization job is enqueued, and a CrossPodRun record is created to track the task’s progress, aggregated across all pods (in this case, we have two pods: 0 and 1).
2. The synchronization job creates a MaintenanceTaskStart cross pod work unit (CPWU) for each pod.
3. On pod 0, a MaintenanceTaskStart processing job runs and searches for MaintenanceTaskStart CPWUs that are queued for pod 0.
4. The processing job calls MaintenanceTaskStart#run on each of these work units. Recall that #run is where the bulk of the work unit’s logic should live.
5. MaintenanceTaskStart#run invokes MaintenanceTasks::Runner#run, which is the gem’s Ruby entrypoint for running a maintenance task. The gem handles everything as it would with a regular, unsharded Rails application, as described in A Refresher On Maintenance Tasks.
6. Details of the MaintenanceTasks::Run record the gem created are stored in the cross pod work unit’s attributes.
7. Subsequent processing jobs find the work units that are still processing and call the #run method on them. If the runner has already been invoked for that work unit, #run will synchronize the updated details from the MaintenanceTasks::Run record to the work unit.
8. A MaintenanceTaskStart synchronization job is also kicked off every minute. Its responsibility is to look at all of the MaintenanceTaskStart work units for a given task, and invoke some behaviour depending on the state of those work units. If all of the work units for a maintenance task are processing, the synchronization job will invoke a processing method, which aggregates information across all of the work units and persists it to the CrossPodRun. For example, the sync job might sum the number of records processed by the task on each pod, and persist it to the CrossPodRun as the “total number of ticks performed”.
9. Pod 1 goes through the same steps for its MaintenanceTaskStart work unit as soon as a processing job runs.
10. When a MaintenanceTaskStart work unit identifies that the maintenance task has finished, it marks the work unit as done_processing.
11. Once the work units are done processing, the synchronization job finalizes the CrossPodRun, setting the ended_at time, etc.

Just as with the MaintenanceTaskStart work unit, if a user cancels a task from the web UI, we enqueue a synchronization job that creates MaintenanceTaskStop work units for each pod. These work units send a #cancel message to the active MaintenanceTasks::Run for the current pod, which results in the maintenance task stopping (this is all handled by the gem itself). Note that the MaintenanceTaskStop work unit is only responsible for sending the #cancel message to the podded run; it is still the MaintenanceTaskStart work unit that will sync information back to the CrossPodRun once the podded run has been marked as cancelled and the task job has stopped.
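The aggregation performed by the synchronization job can be illustrated with a simplified plain-Ruby sketch. This is not the code that runs in Core: PodUnit, CrossPodSummary, and sync are hypothetical names, the status symbols other than done_processing are assumptions, and a real implementation would persist to the CrossPodRun record rather than mutate a struct.

```ruby
# Hypothetical snapshot of one pod's work unit, synced from its podded run.
PodUnit = Struct.new(:pod_id, :state, :tick_count)

# Hypothetical stand-in for the CrossPodRun record in the main pod context.
CrossPodSummary = Struct.new(:status, :tick_total, :ended_at)

# Synchronization step: fold the per-pod details into one cross-pod summary.
def sync(units, summary, now: Time.now)
  # Sum the records processed on every pod into a single total.
  summary.tick_total = units.sum(&:tick_count)

  if units.all? { |u| u.state == :done_processing }
    # Every pod has finished, so close out the cross-pod run.
    summary.status = :finished
    summary.ended_at = now
  else
    summary.status = :processing
  end
  summary
end

summary = CrossPodSummary.new(:processing, 0, nil)
units = [PodUnit.new(0, :processing, 120), PodUnit.new(1, :processing, 80)]

# First sync: both pods are still working.
sync(units, summary)
mid_ticks = summary.tick_total
mid_status = summary.status

# Later sync: pod 1 processed more records and both pods finished.
units[1].tick_count = 100
units.each { |u| u.state = :done_processing }
sync(units, summary)

puts "mid-run: #{mid_status}, #{mid_ticks} ticks"
puts "final:   #{summary.status}, #{summary.tick_total} ticks"
```

Since the summary is recomputed from the work units on every sync, a missed or repeated synchronization job does not skew the totals in this sketch.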

As you can see, integrating the Maintenance Tasks gem with Shopify’s Core monolith required some careful thinking. While we could rely on the gem to do most of the heavy lifting related to running a maintenance task, we had to extend the system to work in a sharded context and to integrate with the existing background job infrastructure. The concept of a CrossPodRun was introduced to offer users a snapshot of what was happening with their task, without requiring them to examine the details of every pod.

The work to adopt maintenance_tasks in Core is broader than what was discussed in this blog (the actual web UI had to be developed from scratch to factor in sharding!), but the biggest challenge to solve was executing tasks across pods. Hopefully at this point you have a general idea about how we approached scaling the maintenance_tasks gem to our massive, sharded Rails monolith.